In our “Engineering Energizers” Q&A series, we shine a spotlight on the innovative engineers at Salesforce. Today, we feature Prithvi Krishnan Padmanabhan, a Distinguished Engineer with over a decade of experience at the company. Prithvi spearheads the cross-cloud architectural vision for Agentforce, a versatile and enterprise-ready platform that powers AI-driven agents across various workflows and products.

Discover how his team tackled the complexities of reasoning across highly customized orgs, addressed latency and scalability issues at both the user experience and infrastructure levels, and maintained trust and cohesion while rapidly deploying features across multiple clouds.

What’s your mission as it relates to Agentforce?

My role at Salesforce spans the entire AI Cloud stack, from Agentforce and Prompt Builder to the retrieval systems within Einstein for Data Cloud. As a Distinguished Engineer, my focus isn’t limited to a single product. Instead, I act as a conductor, ensuring that these systems, often developed by separate teams, work together seamlessly to create a cohesive and unified experience for our customers.

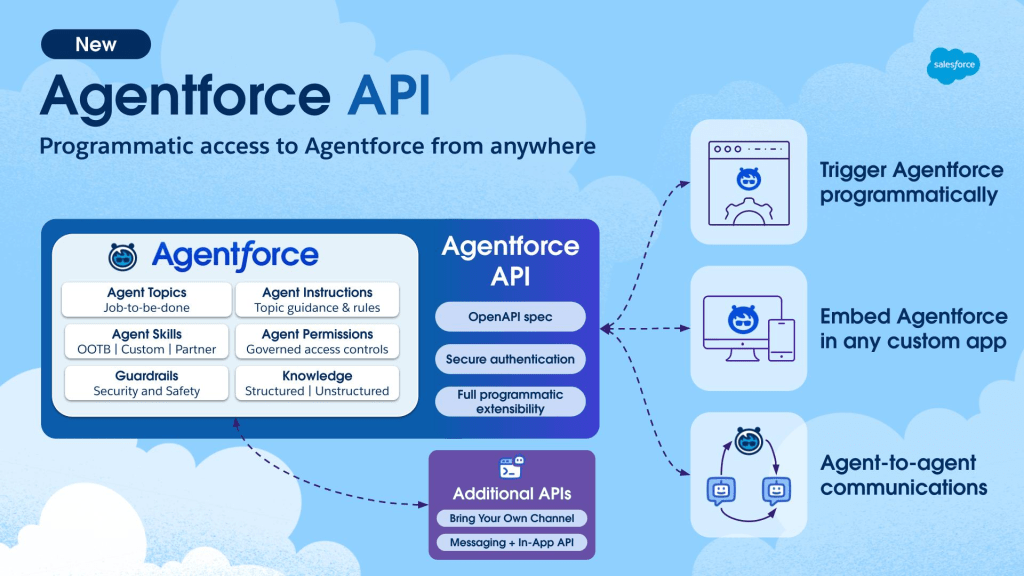

With Agentforce, my mission is to build a general-purpose agent platform that is open, extensible, and customizable. This platform is designed to support CRM use cases but isn’t limited by them. From the very beginning, our goal has been to create a platform that allows customers to bring their own models, integrate them with our existing trust and metadata infrastructure, and still receive first-class support from the rest of the system.

When you bring in a custom model, Agentforce automatically activates all the other layers (deployment, trust, and data governance) with no retrofitting required. This architectural flexibility is fundamental to our approach. It lets customers move from AI experiments to real, enterprise-scale production agents without compromise.
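To make the "bring your own model" idea concrete, here is a minimal sketch, assuming a model only needs to expose a single `generate` method. The names `Model`, `CustomerModel`, and `GovernedModel`, and the email-masking and audit steps, are hypothetical stand-ins for the trust and governance layers, not Agentforce APIs. The point it illustrates is that the wrapper applies the shared layers to any model without the model itself changing.

```python
import re
from dataclasses import dataclass, field
from typing import Protocol


class Model(Protocol):
    """Minimal interface a bring-your-own model is assumed to implement."""
    def generate(self, prompt: str) -> str: ...


class CustomerModel:
    """Hypothetical customer model; it knows nothing about trust or governance."""
    def generate(self, prompt: str) -> str:
        return f"Answer to: {prompt}"


EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


@dataclass
class GovernedModel:
    """Wraps any Model so shared platform layers apply without modifying it."""
    inner: Model
    audit_log: list[str] = field(default_factory=list)

    def generate(self, prompt: str) -> str:
        # Trust layer (assumed): mask email addresses before the model sees them.
        safe_prompt = EMAIL.sub("[EMAIL]", prompt)
        # Governance layer (assumed): record what was sent for later review.
        self.audit_log.append(safe_prompt)
        return self.inner.generate(safe_prompt)


model = GovernedModel(CustomerModel())
print(model.generate("Email jane@example.com about renewal"))
# Answer to: Email [EMAIL] about renewal
```

Because the layers live in the wrapper, swapping `CustomerModel` for any other object with a `generate` method keeps the same protections.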

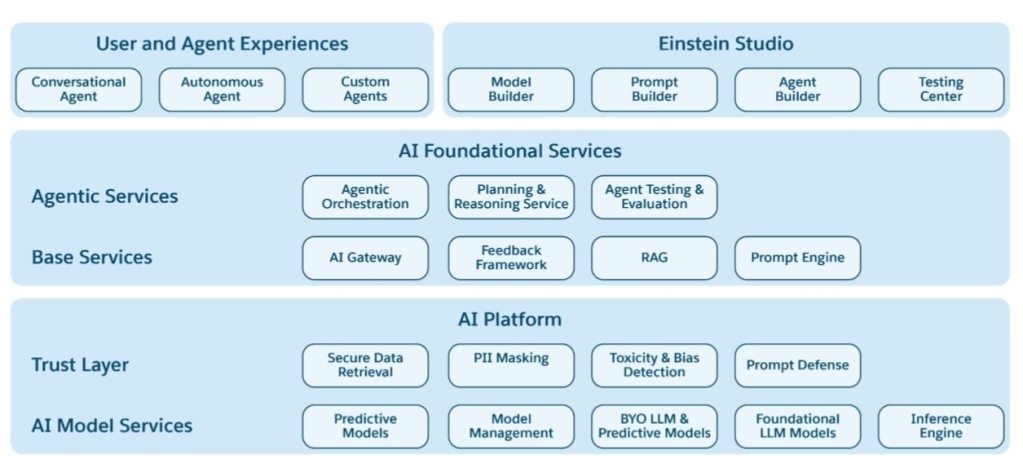

A look at Agentforce’s high-level architecture.

What was the most significant technical challenge encountered during the development of Agentforce?

In the early stages, Agentforce used a sequential planning reasoner and was primarily designed to support internal employee workflows. We initially assumed that a generic, one-size-fits-all reasoning engine would suffice for customer orgs. However, this assumption quickly proved incorrect. Most Salesforce customers operate in highly customized environments with unique object models, business logic, and metadata configurations. The generic design failed to deliver meaningful results because the reasoning engine lacked the necessary awareness of org-specific semantics.

For instance, the summarizeRecord function, which seemed flexible in theory, produced inconsistent or unusable outputs when customers tried to use it. A query like “summarize all opportunities for Acme” often resulted in inaccurate or irrelevant information. The root cause was an overgeneralized reasoning structure and a lack of context sensitivity.

To address this, our engineering team deconstructed the monolithic reasoning model into granular, composable components. This redesign introduced the Atlas Reasoning Engine, which allows customers to dynamically chain together their own constructs. The modular design enables each org to tailor reasoning flows to their specific business processes, significantly enhancing accuracy and adoption.
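The shift from one monolithic reasoner to small, chainable components can be sketched as plain function composition. This is a minimal illustration under stated assumptions, not the Atlas Reasoning Engine itself: `chain`, the step functions, and the in-memory `ORG_ACCOUNTS`/`ORG_DEALS` data are all hypothetical. It shows how an org-aware step (resolving "Acme" against this org's own data) slots into a flow alongside generic steps.

```python
from typing import Callable

Context = dict
Step = Callable[[Context], Context]


def chain(*steps: Step) -> Step:
    """Compose small reasoning steps into one flow, run left to right."""
    def run(ctx: Context) -> Context:
        for step in steps:
            ctx = step(ctx)
        return ctx
    return run


# Hypothetical org-specific data standing in for a customized org.
ORG_ACCOUNTS = {"acme": "001-ACME"}
ORG_DEALS = {"001-ACME": [{"name": "Renewal", "amount": 50_000},
                          {"name": "Upsell", "amount": 20_000}]}


def resolve_account(ctx: Context) -> Context:
    # Org-aware step: map the name in the request to this org's account ID.
    return {**ctx, "account_id": ORG_ACCOUNTS[ctx["account_name"].lower()]}


def fetch_deals(ctx: Context) -> Context:
    return {**ctx, "deals": ORG_DEALS.get(ctx["account_id"], [])}


def summarize(ctx: Context) -> Context:
    total = sum(d["amount"] for d in ctx["deals"])
    return {**ctx, "summary": f"{len(ctx['deals'])} deals worth {total:,}"}


summarize_acme = chain(resolve_account, fetch_deals, summarize)
print(summarize_acme({"account_name": "Acme"})["summary"])
# 2 deals worth 70,000
```

Each step is independently replaceable, so an org with a different object model would swap `fetch_deals` rather than rewrite the whole flow.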

What R&D efforts are driving innovation in Agentforce’s reasoning and orchestration capabilities?

Current research and development efforts within Agentforce are focused on three key areas:

Variable-Based Determinism: This ensures that specific actions always execute in a prescribed order, governed by variables that actions can set but large language models (LLMs) cannot. For example, order-related agents must always verify the user, recording the result in an “is verified” variable, before disclosing sensitive data. While LLMs can be guided by instructions to follow such rules, instruction-based control alone does not guarantee this behavior, and that guarantee is crucial for enterprise-grade reliability and compliance.
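A minimal sketch of variable-gated execution, assuming the LLM can only propose a plan made of registered actions and has no way to write variables directly. `Session`, `verify_user`, `get_order_status`, and `run_plan` are hypothetical names; `is_verified` stands in for the “is verified” variable described above. The gate in `get_order_status` runs in code, so it holds even when the proposed plan skips verification.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    expected_code: str  # set by the platform, e.g. a one-time passcode (assumed)
    variables: dict[str, bool] = field(default_factory=dict)


def verify_user(session: Session, code: str) -> str:
    """An action (deterministic code, not the LLM) is the only writer of is_verified."""
    session.variables["is_verified"] = code == session.expected_code
    return "verified" if session.variables["is_verified"] else "verification failed"


def get_order_status(session: Session, order_id: str) -> str:
    # Gate enforced in code: it applies no matter what the LLM planned.
    if not session.variables.get("is_verified", False):
        return "blocked: verify the user first"
    return f"order {order_id}: shipped"


# The LLM may only call these; none of them lets it set a variable directly.
ACTIONS = {"verify_user": verify_user, "get_order_status": get_order_status}


def run_plan(session: Session, plan: list[tuple[str, dict]]) -> list[str]:
    """Execute an LLM-proposed plan of (action_name, arguments) pairs in order."""
    return [ACTIONS[name](session, **args) for name, args in plan]


s = Session(expected_code="1234")
print(run_plan(s, [("get_order_status", {"order_id": "A1"})]))
# ['blocked: verify the user first']
print(run_plan(s, [("verify_user", {"code": "1234"}),
                   ("get_order_status", {"order_id": "A1"})]))
# ['verified', 'order A1: shipped']
```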

Pluggable Reasoners: This feature allows teams or customers to replace the default Atlas Reasoner with custom-built reasoning engines tailored to specific use cases. For instance, some workflows might benefit from lightweight planners that show a plan preview before execution, while others may require more complex reasoners for deep research or batch processing.
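One way to picture a pluggable reasoner is an interface the agent depends on, with the concrete reasoner passed in at construction. This sketch assumes a `Reasoner` protocol with a single `run` method; `DefaultReasoner`, `PreviewReasoner`, and `Agent` are hypothetical, and the keyword matching inside them is a toy substitute for real planning. The preview reasoner mirrors the "plan preview before execution" example above.

```python
from typing import Callable, Optional, Protocol

Action = Callable[[str], str]


class Reasoner(Protocol):
    def run(self, request: str, actions: dict[str, Action]) -> list[str]: ...


class DefaultReasoner:
    """Stand-in default: executes every action whose name appears in the request."""
    def run(self, request: str, actions: dict[str, Action]) -> list[str]:
        return [fn(request) for name, fn in actions.items() if name in request]


class PreviewReasoner:
    """Lightweight planner: returns the plan for approval instead of executing it."""
    def run(self, request: str, actions: dict[str, Action]) -> list[str]:
        plan = [name for name in actions if name in request]
        return [f"plan: {' -> '.join(plan)}"]


class Agent:
    def __init__(self, actions: dict[str, Action], reasoner: Optional[Reasoner] = None):
        self.actions = actions
        # The reasoner is injected, so it can be swapped without touching the actions.
        self.reasoner = reasoner or DefaultReasoner()

    def handle(self, request: str) -> list[str]:
        return self.reasoner.run(request, self.actions)


actions = {"lookup": lambda req: "looked up records",
           "summarize": lambda req: "wrote summary"}
default_agent = Agent(actions)
preview_agent = Agent(actions, reasoner=PreviewReasoner())
print(default_agent.handle("lookup then summarize"))
# ['looked up records', 'wrote summary']
print(preview_agent.handle("lookup then summarize"))
# ['plan: lookup -> summarize']
```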

Multi-Agent Orchestration: This adds support for coordination among multiple autonomous agents. The current Agentforce architecture already provides basic support for this. Future developments will incorporate standard protocols such as A2A (Agent-to-Agent) and MCP (Model Context Protocol) to strengthen orchestration across complex, interdependent workflows.
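As a rough illustration of coordination between agents, here is an in-process sketch in which a coordinator hands each step of a workflow to a specialist agent and passes results forward. It does not use A2A or MCP; agents are modeled as plain functions, and `Coordinator`, `billing_agent`, and `shipping_agent` are hypothetical names chosen for the example.

```python
from typing import Callable

# Each agent is modeled as a function from a task description to a result (assumption).
AgentFn = Callable[[str], str]


def billing_agent(task: str) -> str:
    return f"{task} | refund approved"


def shipping_agent(task: str) -> str:
    return f"{task} | return label sent"


class Coordinator:
    """Hands each step of an interdependent workflow to a specialist agent."""

    def __init__(self, agents: dict[str, AgentFn]):
        self.agents = agents

    def run(self, request: str, route: list[str]) -> str:
        result = request
        for name in route:
            agent = self.agents.get(name)
            if agent is None:
                raise KeyError(f"no agent registered for {name!r}")
            # Later agents depend on earlier output, so each result is passed forward.
            result = agent(result)
        return result


coordinator = Coordinator({"billing": billing_agent, "shipping": shipping_agent})
print(coordinator.run("damaged order A1", ["billing", "shipping"]))
# damaged order A1 | refund approved | return label sent
```

A standard protocol would replace the in-memory dictionary of functions with agents discovered and called over the network.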

How are scalability and latency challenges addressed within Agentforce?

Agentforce tackles performance with two key strategies:

Optimizing Perceived Latency: Time to First Token (TTFT) is a critical UX metric. Users expect immediate feedback, even if complete responses take several seconds. Agentforce achieves this by showing incremental updates like “calling action,” “retrieving data,” and “executing step.” These UI signals create an illusion of immediacy, keeping users engaged during complex processes.
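The perceived-latency technique amounts to streaming progress events as work happens instead of waiting for the final answer. This minimal sketch uses a Python generator; `run_agent` and the `time.sleep` calls standing in for slow actions are assumptions, and the status strings are the ones quoted above. The first update arrives almost immediately even though the full response takes longer.

```python
import time
from typing import Iterator, Tuple

Event = Tuple[str, str]  # (kind, text)


def run_agent(request: str) -> Iterator[Event]:
    """Yield progress updates as work happens, then the final answer."""
    yield ("status", "calling action")
    time.sleep(0.2)  # stand-in for a slow action call
    yield ("status", "retrieving data")
    time.sleep(0.2)  # stand-in for data retrieval
    yield ("status", "executing step")
    yield ("answer", f"Done: {request}")


start = time.perf_counter()
for kind, text in run_agent("summarize these opportunities"):
    elapsed = time.perf_counter() - start
    print(f"{elapsed:.2f}s {kind}: {text}")
```

The total time is unchanged; what improves is the time until the user sees something, which is the metric TTFT captures.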

Reducing Actual System Delay: On the backend, the focus is on prompt compaction and efficient context selection for LLM interactions. By trimming unnecessary tokens and isolating relevant data, round-trip latency is reduced without compromising output quality. Smart recommendations help as well. When a user selects a pre-generated suggestion, such as “summarize these opportunities,” the system skips the reasoning pipeline, eliminating multiple planning stages and significantly cutting response time.
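Both backend ideas can be sketched in a few lines, under clearly simplified assumptions. `select_context` keeps the most relevant snippets that fit a token budget, using word overlap as a toy relevance score and a whitespace word count in place of a real tokenizer. `handle` shows the suggestion shortcut: a request matching a hypothetical `SUGGESTIONS` table runs its mapped action directly instead of going through LLM planning.

```python
from typing import Callable


def count_tokens(text: str) -> int:
    # Rough stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def select_context(query: str, snippets: list[str], budget: int) -> list[str]:
    """Keep the most relevant snippets that fit within a token budget."""
    query_words = set(query.lower().split())
    # Toy relevance score: number of words a snippet shares with the query.
    scored = [(len(query_words & set(s.lower().split())), s) for s in snippets]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    chosen, used = [], 0
    for score, snippet in scored:
        if score == 0:
            break  # everything from here on is irrelevant, so it is trimmed
        cost = count_tokens(snippet)
        if used + cost <= budget:
            chosen.append(snippet)
            used += cost
    return chosen


# Hypothetical pre-generated suggestions mapped directly to actions.
SUGGESTIONS = {"summarize these opportunities": "summarize_opportunities"}


def handle(request: str, plan_with_llm: Callable[[str], str]) -> str:
    action = SUGGESTIONS.get(request.strip().lower())
    if action is not None:
        # A selected suggestion already names its action, so planning is skipped.
        return f"run {action}"
    return plan_with_llm(request)


snippets = ["Acme opportunity Renewal closes in June",
            "Office holiday schedule",
            "Acme opportunity Upsell is in negotiation stage today"]
print(select_context("Acme opportunity status", snippets, budget=10))
# ['Acme opportunity Renewal closes in June']
```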

These optimizations ensure scalability without sacrificing reliability or flexibility, even in large, multi-object organizations.

What mechanisms enable rapid delivery of Agentforce features while maintaining enterprise-level trust and quality?

Agentforce employs a progressive rollout strategy to ensure fast and low-risk feature deployment. New features start with a small group of trusted customers who test them in sandbox or production environments. If everything checks out, the rollout expands. If issues are found, the system can be quickly and seamlessly rolled back. The release schedule is tailored to different components:

- Patch Updates: Every two weeks

- New Features: Monthly

- Critical Subsystems (like the Atlas Reasoning Engine): Every other day
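The progressive rollout described above (trusted customers first, gradual expansion, fast rollback) is commonly implemented with stable hash bucketing. This is a generic sketch of that technique, not Salesforce's release tooling: `Rollout`, its fields, and the org IDs are hypothetical. Hashing the feature and org ID together means an org enabled at 30% stays enabled at 60%, and a single flag disables the feature everywhere.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Rollout:
    feature: str
    pilot_orgs: set[str] = field(default_factory=set)  # trusted early customers
    percent: int = 0  # share of all other orgs that get the feature, 0 to 100
    rolled_back: bool = False

    def bucket(self, org_id: str) -> int:
        # Stable bucket in [0, 99], so an org's answer only changes as percent grows.
        digest = hashlib.sha256(f"{self.feature}:{org_id}".encode()).hexdigest()
        return int(digest, 16) % 100

    def enabled(self, org_id: str) -> bool:
        if self.rolled_back:
            return False
        return org_id in self.pilot_orgs or self.bucket(org_id) < self.percent

    def expand(self, percent: int) -> None:
        # Rollouts only widen; shrinking is done through rollback().
        self.percent = max(self.percent, min(percent, 100))

    def rollback(self) -> None:
        self.rolled_back = True


rollout = Rollout("new-planner", pilot_orgs={"org-pilot"})
print(rollout.enabled("org-pilot"), rollout.enabled("org-42"))
# True False
```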

Each release goes through rigorous testing, including soak testing and real-time observability analysis. This approach maintains high quality and allows AI Cloud to stay agile and responsive to market changes, all while upholding Salesforce’s reputation as a reliable enterprise platform.

What safeguards are in place to prevent regressions as Agentforce evolves across multiple teams?

Agentforce’s development process covers a range of product lines, including Data Cloud, Prompt Builder, EDC, and the core Platform. To avoid regressions, the team uses a multi-stage review and coordination process.

Virtual Architecture Team (VAT) Sessions: These sessions act as the first checkpoint. Product teams present their designs to a panel of senior engineers who ensure architectural cohesion. Any issues with interface definitions, system dependencies, or design assumptions are caught and resolved early, before development moves forward.

Monthly Tech Summits: These summits provide a second layer of review. They bring together stakeholders from all relevant teams to discuss in-progress features, interdependencies, and upcoming releases. Any misalignments or missing integrations are identified and must be fixed before the launch.

By implementing these practices, Agentforce ensures a stable integration surface and promotes a shared understanding of the architecture. This approach allows the platform to scale efficiently across hundreds of engineers while maintaining its integrity.
